In case you're new to T-SQL Tuesday, it is a blogging party hosted by a different person each month. It’s a creation of Adam Machanic (b|l|t), and it’s been going on for a very long time - we're currently on #101.

LadyRuna's Essential SQL Server Tools

As a consultant, I am often in a situation where I cannot make changes to the system I am using, so I frequently have to work with whatever the client provides. Sometimes I'm lucky and they provide a few of my favorite tools, and other times not so much. Therefore, the tools I'm listing are the ones most frequently available to me in my consulting gigs.

Notepad++

I hear you laughing, but think about it: you're tasked with creating an SSIS package to import a flat file into your SQL Server. Before you do anything in SSIS, you have to look at the file so you know whether the package will require any data transformations prior to loading the content into the SQL table.

If you open the file in Excel, it may automatically change the data types incorrectly. A good example is converting an account number that begins with 00 to an integer: that removes the leading zeros, which changes the account number's value. Excel may also reformat values in an undesirable way, such as displaying a phone number in scientific notation. If you open the file in Notepad++, you will see exactly what the source system output, and you will be able to map all of the data correctly the first time.
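The leading-zero problem is easy to check for before building the package. The following is a minimal Python sketch, not part of any of the tools in this post: the sample data, the column names, and the `columns_needing_text` helper are all hypothetical. It reads every value as plain text and flags columns that would lose information if converted to an integer.

```python
import csv
import io

# Hypothetical sample standing in for a flat file from a source system.
SAMPLE = (
    "account_number,phone,balance\n"
    "00123,5551234567,10.50\n"
    "00456,5559876543,7.25\n"
)


def columns_needing_text(handle):
    """Return the names of columns where an integer conversion would alter a value."""
    reader = csv.DictReader(handle)  # every value is read as a string
    flagged = set()
    for row in reader:
        for name, value in row.items():
            if not isinstance(value, str):
                continue  # skip missing or malformed fields
            # An all-digit value with a leading zero loses that zero as an integer.
            if len(value) > 1 and value.isdigit() and value.startswith("0"):
                flagged.add(name)
    return sorted(flagged)


flagged = columns_needing_text(io.StringIO(SAMPLE))
print(flagged)
```

For a real file, the same function could be given an open file handle instead of the in-memory sample. Any column it flags is a candidate for a string data type in the destination table.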

Notepad++ also offers syntax highlighting for various programming languages, a spell-checker, and prompts for frequently typed words (the list seems to grow the more you use it). Notepad++ highlights pairs of parentheses, brackets, and braces; toggles comments; gracefully handles indents; and has fantastic searching capabilities. I like it enough that I find I use Notepad++ more than SSMS for many coding activities.

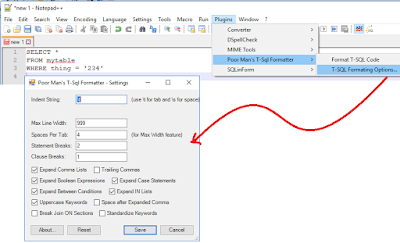

Poor Man's T-SQL Formatter

This is a free, open-source, downloadable T-SQL formatter available for SSMS, Visual Studio, and Notepad++. It provides enough formatting options to satisfy most of the T-SQL formatting standards I've seen. If you haven't tried it yet, please download it and see for yourself.

Poor SQL

This is a free, online SQL formatter. Since there is nothing to download or install, I use it when I'm working in an environment where I don't have access to any other formatter and I want my code to be formatted consistently and/or in compliance with the customer's style. I have not yet encountered a situation where this option was blocked.

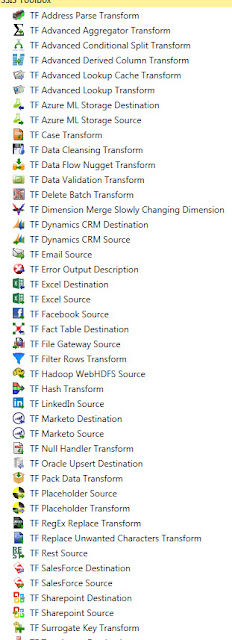

Task Factory

Image: Some of the Task Factory components

Task Factory, a Pragmatic Works product, has over 60 SSIS components that are optimized to run faster than the native SSIS components. The Task Factory components handle many tasks that previously could only be handled using custom script components, allowing users to avoid the nightmare of debugging and rewriting script components for different environments.

BI xPress

Yet another Pragmatic Works product that I wish I had known about years ago is BI xPress. It includes auditing and notification frameworks that can easily be applied to any SSIS package to provide consistent and efficient auditing of SSIS package runs.

Once the auditing framework has been applied to a package, the SSIS Monitoring shows details about each run - including the values of variables at each component and, if the package is still running, which step it is currently running. I've found it's great for debugging packages that "worked on my machine" but aren't working properly in the SSISDB. I can see everything about the package in the SSIS Monitor in a view similar to what I'd see when running the package in Visual Studio.

There are more features to BI xPress than that, but in my experience those features are the ones I go to most often.

DOC xPress

One more Pragmatic Works product I wish the companies I worked for years ago had is DOC xPress. Its main function is to create documentation of SQL Server, SSAS, SSIS, SSRS, Excel, Oracle, and other database-based systems. It does this by pulling metadata about each of the objects and compiling it into a single cohesive unit. The documentation can be output as a CHM help file, HTML, RTF, or a Word document.

I've found this is a fantastic device for learning about a new database system because all of the information is available in one spot. If a change occurs on a database that has been documented, after taking a new snapshot, the "before" version of the database can be compared to the "current" version to see what has changed.
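To illustrate the idea of comparing a "before" snapshot to a "current" one, here is a small Python sketch. It is not how DOC xPress works internally. It assumes a snapshot is just a dictionary of table names to column data types, and the `diff_snapshots` helper and the sample tables are hypothetical.

```python
def diff_snapshots(before, current):
    """Compare two {table: {column: data_type}} snapshots and list the changes."""
    changes = []
    for table in sorted(set(before) | set(current)):
        old_cols = before.get(table, {})
        new_cols = current.get(table, {})
        for column in sorted(set(old_cols) | set(new_cols)):
            old_type = old_cols.get(column)
            new_type = new_cols.get(column)
            if old_type is None:
                changes.append((table, column, "added", new_type))
            elif new_type is None:
                changes.append((table, column, "removed", old_type))
            elif old_type != new_type:
                changes.append((table, column, "changed", f"{old_type} -> {new_type}"))
    return changes


# Hypothetical snapshots of the same database taken at two points in time.
before = {"Account": {"AccountNumber": "int", "Phone": "varchar(20)"}}
current = {"Account": {"AccountNumber": "varchar(10)", "Email": "varchar(100)"}}

changes = diff_snapshots(before, current)
for change in changes:
    print(change)
```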

DOC xPress even has a "lineage" feature that allows users to answer the question "Where did this field originate?" This means you can potentially pick a field in a table in the SSAS cube, find the SSIS package that loaded the cube, and trace that field back to the source database where it originated. Tracing the "lineage" with this tool is many times more efficient than the old-fashioned method of getting a pre-dawn phone call about a process failure because someone made a change and didn't find all of the downstream effects of that change.
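Conceptually, tracing lineage means walking a chain of "this field was loaded from that field" links back to the start. The Python sketch below shows that walk under simple assumptions: each field has at most one upstream source, and the `LINEAGE` mapping and field names are invented for illustration. It does not reflect how DOC xPress stores or traces lineage.

```python
# Hypothetical mapping of each field to the field it was loaded from.
LINEAGE = {
    "Cube.Sales.AccountNumber": "DW.FactSales.AccountNumber",
    "DW.FactSales.AccountNumber": "Staging.Sales.AcctNo",
    "Staging.Sales.AcctNo": "SourceDB.Accounts.AcctNo",
}


def trace_lineage(field, lineage):
    """Follow upstream links from a field until reaching one with no recorded source."""
    path = [field]
    seen = {field}
    while path[-1] in lineage:
        upstream = lineage[path[-1]]
        if upstream in seen:
            break  # guard against a circular mapping
        path.append(upstream)
        seen.add(upstream)
    return path


path = trace_lineage("Cube.Sales.AccountNumber", LINEAGE)
print(" <- ".join(path))
```

The last entry in the returned path is the origin of the field, and every entry in between is a place where a change could have downstream effects.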